Data engineers collaborate with data scientists to improve data transparency and enable better decision-making processes. They also work with business and data analysts to create systems and tools to maximize the efficient use of all data sources.

Outstanding data engineers are hard to come by. Creating a great job description is your first step, and we get that writing one is about as exciting for you as it is for candidates to write their data engineer cover letters. See our examples and follow our recommendations to write an outstanding data engineer job description to attract top candidates.

Why this job description works

- When you’re advertising a technical job, you’ll want to pack plenty of information into your introductory job overview–but don’t get carried away! Keep things readable and don’t overload the reader with stuff that would be clearer in a bulleted list.

- A desirable data engineer resume should include plenty of skills and experience highlights that align with your open role. But leave room in your intro to let your brand’s personality shine and see if they jive with that, too!

- While revising your data engineer job description, analyze your tone to make sure it reflects the traits you’re looking for.

- Opportunity for growth within the company is a great selling point for your job, so mention it when possible.

- Be clear about your direction, and take a look at an applicant’s resume objective to see if they’re headed the same way.

Why this job description works

- The technical focus of your big data engineer job description is a given, so open your ad with details like company size and specific teams your new hire will work with. These key tidbits of information will help people accurately gauge whether they’re a good fit.

- Be transparent about the balance between collaboration and technical work within your job position to attract the ideal candidates: if your open role requires coordination with multiple departments, then you want a big data engineer resume that includes soft skills alongside all the tech abilities!

- While your intro may need to spotlight values like teamwork and giving back to the community, your qualifications section is the perfect spot to make technical requirements crystal clear.

- Name specific programs you’ll want to see in your ideal applicant’s resume skills section, such as Python and SQL.

Why this job description works

- Your senior data engineer job description should include active, confident wording that shows the same go-getter attitude you’re looking for in your new hire!

- Words like “pioneer,” “build,” and “leverage” are always great picks for your job ad. Look for similar wording in a senior data engineer resume that shows the applicant’s enthusiasm and proactivity.

- Your senior data engineer will naturally take on a broad role, so dividing your qualifications list into “Required” vs. “Preferred” will make things easier to digest and hint at what types of achievements and metrics matter the most.

- Clever candidates will provide quantifiable examples of their accomplishments, so emphasize any required abilities or certifications in your qualifications section.

How to Write a Winning Data Engineer Job Description z

Writing a well-crafted and highly effective job description for a data engineer leaves many scratching their heads. This is often the case when it comes to many advanced and highly technical positions. Folks in technical roles possess extensive education, skills, certifications, and experience.

When creating a job description for these types of positions, the largest challenge is typically to avoid making it too long. Keep your description focused on the most important things your data engineer must have to be successful in the role. We’ll show you how.

Refine your job description down to the crucial information

Data engineering is a difficult job requiring the completion of many complex tasks simultaneously. Data engineers deal with many other technical and non-technical resources regularly and work with complicated tech systems to handle large amounts of data. Tight time constraints, pressure to process data quickly and efficiently, the goal to provide succinct results to data scientists and others—conveying the ins and outs of that high-level view is just not easy.

So, first, aim to keep the attention of your readers. If potential candidates don’t completely read and understand your job posting, it’s unlikely they’ll apply. Ensuring the sections of your job description are brief and informative means your focus must be on only the pertinent job requirements for a data engineering role. It’s not the time to include anything extraneous.

Secondly, sell the job and your organization. The main objective of your job posting is to find the best person for your data engineering role. This means convincing them that your job opening and your company are superior to other jobs. You are trying to attract as many qualified applicants as possible. Often the best way to explain what a job is about is to provide examples, which remove vague and generic talk and give life to what it is your company actually does:

- Provide an example of a specific problem they’ll need to fix (developing a custom data pipeline that integrates with third-party systems to import data sets),

- Describe a gap they’ll fill (collaborating with data scientists to improve data feeds to the business intelligence applications), or

- Give a reason(s) why you’re specifically hiring for the role (creating a support team to maintain external and internal data APIs).

Thirdly, we cannot stress enough the need to be concise in your writing. As with any business writing, avoid extra words and don’t beat around the busy. Each word in your posting must be meaningful. Portray the important aspects of the role in as few words as possible; avoid circumlocutions (excess words that add no meaning).

Avoid discouraging diverse candidates

Too many requirements in your data engineering job description? You may, inadvertently, dissuade diverse candidates. Minimize this problem by limiting your requirements; only include the critical and unique needs of your company. Remove common data engineering skills or abilities, such as “strong data analysis skills.” Extra and/or less specific soft skills also tend to be unnecessary. Remember more words don’t mean better.

Many job descriptions are also plagued with biased wording or phrases. Unbiased writing is a skill that must be developed. It requires conscious effort to ensure your words and phrases will encourage diverse individuals to apply. Doing so has its rewards, according to Walden University’s writing center, as writing objectively and inclusively wins the “respect and trust from readers” and “[avoids] alienating” them.

Edit and proofread your content

The last step in creating a great data engineering job description is thoroughly editing and proofreading. It’s a tedious task, but take the time to carefully review and edit your work. It won’t do to misspell the tech skills required or, worse, have completely missed a necessary responsibility or skill.

We also suggest inviting as many other people as possible to review your job description. Preferably, have other data engineers (after all, they know the role best!) take a peek. You can sit on their constructive criticism for a day if it’s helpful.

You absolutely do not want to submit a job description with bad wording, grammatical and spelling errors, or inaccurate benefits or experience ranges. Spending time on careful reviews and editing will reap major benefits, save you embarrassment, and will ensure you’ve published a high-quality listing. Before you know it, outstanding data engineering resumes and applications will be flooding your inbox.

Build the Frame for Your Data Engineer Job Description

Authoring a great job description can be an intimidating task, especially if it’s a job role that’s unfamiliar. If that’s the case for you, using the examples we’ve provided is good for inspiration, but starting with a solid outline may be the best way to start:

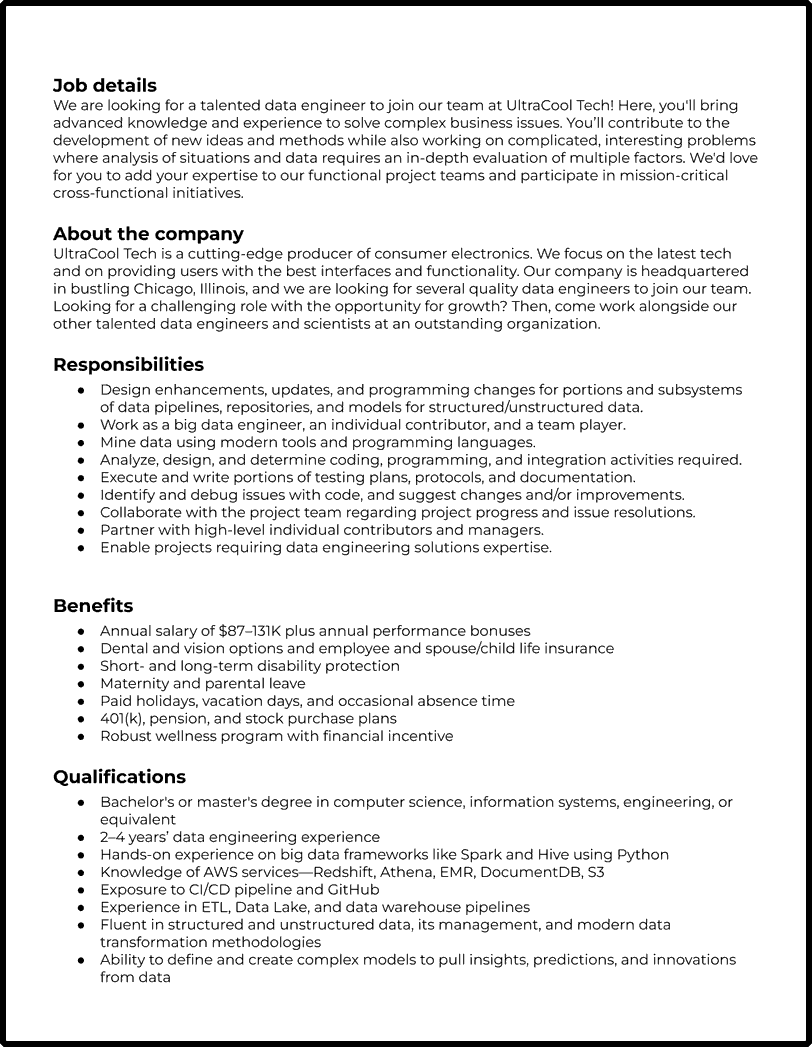

Job details

Provide a brief introduction to the company and the open position. Utilize this section to snag the reader’s attention. Offer a quick description of why your company is awesome and why they should work there. Promote the data engineering role specifically to encourage job seekers to continue reading.

About the company

This section should contain additional information about your company. A data engineering professional will want to know your company’s strategy regarding the use of big data and how they leverage data analysis. Provide a brief description of these areas and anything else important about the data engineering role. This is your opportunity to make a real impression.

What you’ll be doing

This section is also typically referred to as “Roles” or “Responsibilities” or “Requirements.” Create a bulleted list of the most important tasks. Keep it short without leaving out anything crucial or minimizing anything that makes the job attractive. Emphasize what’s unique or cool about data engineering in your company. Follow the rules of good business writing, use active verbs, avoid jargon and filler, and be clear and brief.

- Collaborate with and across Agile teams to design, develop, test, implement, and support technical solutions in full-stack development tools and technologies.

- Work with a team of developers with deep experience in machine learning, distributed microservices, and full-stack systems.

- Leverage programming languages like Java, Scala, Python, and Open Source RDBMS and NoSQL databases and Cloud-based data warehousing services such as Redshift and Snowflake.

- Support data science, data enrichment, research, and data analysis as well as making data operationally able to be consumed.

Qualifications

The list of items you require is key—this is where candidates will determine whether their background fits the job. List any educational, experience levels, and certifications required. Be certain that you include the absolute must-haves for the role.

We get that it’s challenging to keep the list of technical skills and qualifications short for highly technical jobs. As much as possible, stick with objective items. If you have soft skills that are really, really important for the role, do include those but be specific. Don’t include general attributes that just about anyone in the data engineering profession will have (i.e. analytical, detail-oriented, communication skills). Most candidates will usually have these things mentioned in their data engineering resume and/or cover letter.

- 5+ years of data engineering experience in big data solutions

- Experience working in a fast-paced and agile work environment

- Skilled at communicating and engaging with a range of stakeholders

- Hands-on development work building scalable data engineering pipelines and other data engineering/modeling work using one or more of the following: Python, Kafka, Hadoop/Hive, and Presto

- Ability to define and create complex models to pull valuable insights, predictions, and innovations from data

- Effectively and creatively tell stories and build visualizations to describe and communicate data insights

Benefits

Benefits are something you always need to list in a job description. The placement in the overall format is flexible, but don’t make it the first section. Make sure to include anything your company provides that’s exceptional or unusual, such as wellness programs or an unlimited vacation policy. If your benefits are superior, you may want to place this section early in the description to grab readers’ attention.

A Data Engineer’s Possible Roles and Responsibilities

Data engineers focus on collecting and preparing data for use by data scientists and analysts. They often work as part of an analytics team alongside data scientists and provide data in usable formats to the data scientists who run queries and algorithms against the information for predictive analytics, machine learning, and data mining applications. Data engineers also deliver aggregated data to business users, management, and analysts, so they can analyze it, apply the results, and build strategies to improve business operations.

Breaking a data engineer’s roles down to include on a job description might be overwhelming, so use the following list for some ideas:

General data engineering

- Data engineers with a general focus typically work on small teams, doing end-to-end data collection, intake, and processing.

- They have more skill with data structures but less knowledge of systems architecture. A data scientist looking to become a data engineer would fit well into the generalist role.

- A generalist data engineer for a small, food delivery service might be tasked with creating a dashboard to show the number of deliveries each day and forecast the delivery volume for the following month.

Working with different data types

- Data engineers deal with both structured and unstructured data.

- Structured data is information that can be organized into a formatted repository, like a database.

- Unstructured data (text, images, audio, and video files) doesn’t conform to conventional data models and requires different methods.

- Data engineers must understand different approaches to data architecture and applications to handle both data types.

- A variety of big data technologies, such as open-source data ingestion and processing frameworks, are also part of the data engineer’s toolkit.

Data pipeline design

- Data pipeline engineering typically involves more complicated data science projects across distributed systems.

- Midsize and large companies are more likely to need this role.

- A pipeline-centric project might be to create a tool for data scientists and analysts to search metadata for information.

Database management

- Data engineers are often tasked with implementing, building, maintaining, and populating analytics databases.

- This role typically exists at larger companies where data is distributed across multiple databases.

- Knowledge of relational database systems, such as MySQL and PostgreSQL, is quite useful to a data engineer.

- Data engineers work with pipelines, tune databases for efficient analysis, and create table schemas using extract, transform, load (ETL) methods. ETL is a process in which data is copied from numerous sources into a single destination system.

- An example project might be to design an analytics database. In addition to creating the database, the data engineer would write the code to collect data from an application database and move it into the analytics database.

Subject-matter expertise

- Data engineers are skilled in programming languages such as C#, Java, Python, R, Ruby, Scala, and SQL.

- They must understand ETL tools and REST-oriented APIs for creating and managing data integration jobs.

- Knowledge of data warehouses and data lakes and how they work is important.

- For instance, Hadoop data lakes that offload the processing and storage work of established enterprise data warehouses support the big data analytics efforts of data engineers.

- Data engineers possess skills with NoSQL and Apache Spark systems for data workflows as well as Lambda architecture, which supports unified data pipelines for batch and real-time processing.

- They have a working knowledge of Business Intelligence (BI) platforms and the ability to configure them.

- BI platforms can establish connections among data warehouses, data lakes, and other data sources.

- Data engineers need familiarity with machine learning to be able to prepare data for machine learning platforms. They should know how to deploy machine learning algorithms and gain insights from them.

- Knowledge of Unix-based operating systems (OS) is indispensable. Unix, Solaris, and Linux provide functionality and root access that other OSes don’t.

Continual learning

- As the data engineer profession has grown, companies such as IBM and Hadoop vendor Cloudera Inc. have begun offering certifications for data engineering. Some of the more popular data engineer certifications include:

- Certified Data Professional: offered by the Institute for Certification of Computing Professionals (ICCP) as part of its general database professional program.

- Cloudera Certified Professional Data Engineer: capabilities to take in, transform, store, and analyze data in Cloudera’s data tool environment.

- Google Cloud Professional Data Engineer: expertise in using machine learning models, ensuring data quality, and building and designing data processing systems.